Axis Communications: H.264 video compression standard provides new video surveillance opportunities

The latest video compression standard, H.264 (also known as MPEG-4 Part 10/AVC, for Advanced Video Coding), is expected to become the video standard of choice in the coming years. H.264 is an open, licensed standard that supports the most efficient video compression techniques available today without compromising image quality. An H.264 encoder can reduce the size of a digital video file by more than 80% compared to the Motion JPEG format and by as much as 50% compared to the MPEG-4 Part 2 standard. Much less network bandwidth and storage space are required for a video file or, seen another way, much higher video quality can be achieved for a given bit rate.
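To make the savings figures above concrete, the following minimal sketch turns a percentage reduction into bit rate and storage numbers. The 5,000 kbit/s Motion JPEG starting point, the 24-hour recording period and the function names are illustrative assumptions, not figures from Axis; only the 80% and 50% reductions come from the text.

```python
def scaled_bit_rate(reference_kbps: float, reduction: float) -> float:
    """Bit rate left after a fractional reduction (0.8 means 80% smaller)."""
    if not 0.0 <= reduction < 1.0:
        raise ValueError("reduction must be in the range [0, 1)")
    return reference_kbps * (1.0 - reduction)


def storage_gb(kbps: float, hours: float) -> float:
    """Storage in gigabytes (10**9 bytes) for a constant-bit-rate stream."""
    bits = kbps * 1000 * hours * 3600
    return bits / 8 / 1e9


# Hypothetical Motion JPEG stream; the value is an assumption for illustration.
MJPEG_KBPS = 5000.0

# H.264 at 80% below Motion JPEG (the text says "more than 80%").
h264_kbps = scaled_bit_rate(MJPEG_KBPS, 0.80)

# H.264 is up to 50% below MPEG-4 Part 2, so Part 2 is about twice H.264.
mpeg4_part2_kbps = h264_kbps / (1.0 - 0.50)

for name, kbps in [("Motion JPEG", MJPEG_KBPS),
                   ("MPEG-4 Part 2", mpeg4_part2_kbps),
                   ("H.264", h264_kbps)]:
    print(f"{name:14s} {kbps:7.0f} kbit/s  {storage_gb(kbps, 24):5.1f} GB per day")
```

Under these assumptions, one day of video shrinks from roughly 54 GB with Motion JPEG to roughly 11 GB with H.264. Actual savings depend on scene content and encoder settings.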

H.264 represents a huge step forward in video compression technology. It enables better compression efficiency due to more accurate prediction capabilities, as well as improved resilience to errors. It provides new opportunities for creating better video encoders that provide higher quality video streams, higher frame rates and higher resolutions at maintained bit rates (compared with previous standards) or, conversely, the same quality video at lower bit rates.

H.264 marks the first time that the ITU, ISO and IEC standardization organizations in the telecommunications and IT industries have come together on a common, international standard for video compression. H.264 is the name used by ITU-T, while ISO/IEC has named it MPEG-4 Part 10/AVC, since it is presented as a new part of its MPEG-4 suite.

The MPEG-4 suite also includes MPEG-4 Part 2, a standard that has been used by IP-based video encoders and network cameras. H.264 is expected to be more widely adopted than previous standards: it has already been introduced in mobile phones and digital video players, and has gained fast acceptance by end users. Service providers such as online video storage and telecommunications companies are also beginning to adopt H.264.

Due to its flexibility, H.264 has already been applied in high-definition DVD (e.g. Blu-ray), digital video broadcasting including high-definition TV, online video storage (e.g. YouTube), third-generation mobile telephony, in software such as QuickTime, Flash and Apple Computer’s MacOS X operating system, and in home video game consoles such as PlayStation.

Development of H.264

Designed to address several weaknesses in previous video compression standards, H.264 delivers on its goals of supporting:

- implementations that deliver an average bit rate reduction of 50% at a fixed video quality, compared with any other video standard

- error robustness so that transmission errors over various networks are tolerated

- low latency capabilities and better quality for higher latency

- straightforward syntax specification that simplifies implementations

- exact match decoding, which defines how numerical calculations are to be made by a codec to avoid errors from accumulating

H.264 also has the flexibility to support a wide variety of applications with very different bit rate requirements. In entertainment video applications such as broadcast, satellite, cable and DVD, H.264 will deliver performance at between 1 and 10 Mbit/s with high latency, while for telecom services it will operate below 1 Mbit/s with low latency.

How Video Compression Works

Video compression reduces and removes redundant video data so that a digital video file can be effectively sent and stored. An algorithm is applied to the source video to create a compressed file that is transmitted or stored. An inverse algorithm is applied to the compressed file to produce a video that shows virtually the same content as the original source video.

The more advanced the compression algorithm, the higher the latency, given the same processing power. Different video compression standards utilize different methods of reducing data, and hence, the results differ in bit rate, quality and latency. A decoder, unlike an encoder, must implement all the required parts of a standard in order to decode a compliant bit stream because a standard specifies exactly how a decompression algorithm should restore every bit of a compressed video.

Figure 5 provides a bit rate comparison, given the same level of image quality, among the following video standards: Motion JPEG, MPEG-4 Part 2 (no motion compensation), MPEG-4 Part 2 (with motion compensation) and H.264 (baseline profile).

H.264 Profiles and Levels

The joint group involved in defining H.264 focussed on creating a simple and clean solution, limiting options and features to a minimum. An important aspect of the standard is that H.264 has eleven levels (performance classes or degrees of capability) to limit bandwidth and memory requirements and also performance for resolutions ranging from QCIF to HDTV and beyond.

Seven profiles (sets of algorithmic features), each targeting a specific class of applications and defining what feature set the encoder may use, optimally support popular productions and common formats. Network cameras and video encoders will most likely use a profile called the baseline profile, which is intended primarily for applications with limited computing resources.

The baseline profile is the most suitable given the available performance in a real-time encoder that is embedded in a network video product. The profile also enables low latency, which is an important requirement of surveillance video and is particularly important in enabling real-time pan/tilt/zoom (PTZ) control in PTZ network cameras.

Understanding Frames

Depending on the H.264 profile, different types of frames such as I-frames, P-frames and B-frames, may be used by an encoder.

An I-frame, or intra frame, is a self-contained frame that can be independently decoded without any reference to other images. The first image in a video sequence is always an I-frame, and it can serve as a starting point for new viewers or as a resynchronization point if the transmitted bit stream is damaged. I-frames can be used to implement fast-forward, rewind and other random access functions. An encoder will automatically insert I-frames at regular intervals or on demand if new clients are expected to join in viewing a stream. The drawback of I-frames is that they consume many more bits, but on the other hand, they do not generate many artifacts.

A P-frame, which stands for predictive inter frame, makes references to parts of earlier I- and/or P-frames to code the frame. P-frames usually require fewer bits than I-frames, but a drawback is that they are very sensitive to transmission errors because of the complex dependency on earlier P and I reference frames.

A B-frame, or bi-predictive inter frame, is a frame that makes references to both an earlier reference frame and a future frame. In the H.264 baseline profile, only I- and P-frames are used. This profile is ideal for network cameras and video encoders since low latency is achieved because B-frames are not used.
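The dependency between frame types described above can be illustrated with a small sketch of a baseline-style stream that contains only I- and P-frames. It assumes, for simplicity, that every P-frame references only the frame immediately before it (H.264 itself allows several reference frames), and the function names and the I-frame interval of 4 are hypothetical choices for illustration.

```python
from typing import List


def gop_pattern(num_frames: int, i_interval: int) -> str:
    """Frame types for a stream with an I-frame every `i_interval` frames."""
    return "".join("I" if n % i_interval == 0 else "P"
                   for n in range(num_frames))


def affected_by_loss(pattern: str, lost: int) -> List[int]:
    """Indices of frames that cannot be decoded correctly if `lost` is damaged.

    Assumes each P-frame depends only on the previous frame, so the error
    propagates until the next I-frame resynchronizes the decoder.
    """
    affected = [lost]
    for n in range(lost + 1, len(pattern)):
        if pattern[n] == "I":
            break  # a self-contained frame stops the error propagation
        affected.append(n)
    return affected


pattern = gop_pattern(8, 4)
print(pattern)                       # IPPPIPPP
print(affected_by_loss(pattern, 1))  # [1, 2, 3]
```

A shorter I-frame interval limits how long a transmission error stays visible, at the cost of a higher bit rate, because I-frames are larger than P-frames.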

Basic Methods of Reducing Data

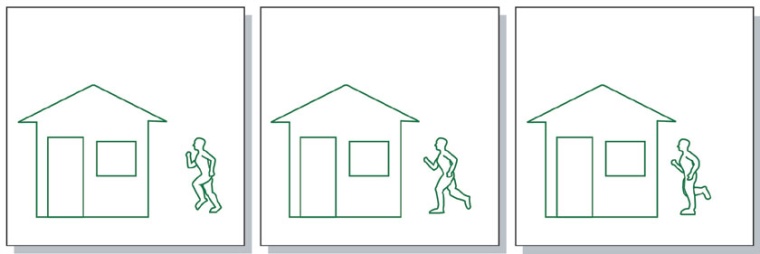

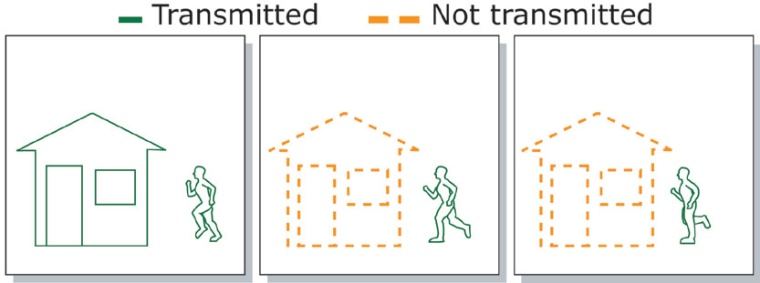

Various methods can be used to reduce video data, for example by simply removing unnecessary information, reducing image resolution, or by such methods as difference coding, which is used by most video compression standards including H.264. Here a frame is compared with a reference frame (i.e. earlier I- or P-frame) and only pixels that have changed with respect to the reference frame are coded. This reduces the number of pixel values that are coded and sent.
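As a rough illustration of difference coding, the sketch below stores only the pixels that differ from a reference frame and rebuilds the frame from the reference plus those changes. It is a lossless toy on small grayscale grids with hypothetical function names; a real H.264 encoder works on blocks and transforms and quantizes the differences rather than listing raw pixel values.

```python
from typing import List, Tuple

Frame = List[List[int]]           # rows of grayscale pixel values
Change = Tuple[int, int, int]     # (row, column, new value)


def encode_difference(reference: Frame, frame: Frame) -> List[Change]:
    """Keep only the pixels that changed with respect to the reference."""
    return [(r, c, value)
            for r, row in enumerate(frame)
            for c, value in enumerate(row)
            if value != reference[r][c]]


def decode_difference(reference: Frame, changes: List[Change]) -> Frame:
    """Rebuild the frame by applying the coded changes to the reference."""
    frame = [row[:] for row in reference]
    for r, c, value in changes:
        frame[r][c] = value
    return frame


reference = [[1, 2, 3, 4],
             [5, 6, 7, 8],
             [9, 10, 11, 12]]
current = [[1, 99, 3, 4],
           [5, 6, 7, 8],
           [9, 10, 11, 7]]

changes = encode_difference(reference, current)
print(changes)  # [(0, 1, 99), (2, 3, 7)]
```

Here only 2 of the 12 pixels need to be coded, and the decoder recovers the current frame exactly from the reference and the change list.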

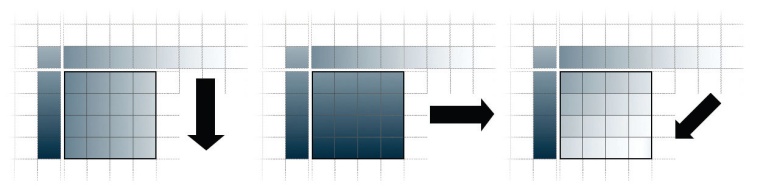

The amount of encoding can be further reduced if detection and encoding of differences is based on blocks of pixels (macroblocks) rather than individual pixels; therefore, bigger areas are compared and only blocks that are significantly different are coded. Block-based motion compensation divides a frame into a series of macroblocks. Block by block, a new frame can be composed or ‘predicted’ by looking for a matching block in a reference frame.

If a match is found, the encoder simply codes the motion vector, that is, the position where the matching block is to be found in the reference frame. This takes up fewer bits than coding the actual content of the block.
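The block search described above can be sketched as an exhaustive search that minimizes the sum of absolute differences (SAD) between the current block and candidate blocks in the reference frame. The SAD cost, the full search over a small window and all names are assumptions made for illustration; the article does not specify how an encoder finds its matches, and real encoders typically use faster search strategies.

```python
from typing import List, Tuple

Frame = List[List[int]]


def sad(ref: Frame, cur: Frame, ry: int, rx: int,
        cy: int, cx: int, size: int) -> int:
    """Sum of absolute differences between two size x size blocks."""
    return sum(abs(ref[ry + j][rx + i] - cur[cy + j][cx + i])
               for j in range(size) for i in range(size))


def find_motion_vector(ref: Frame, cur: Frame, cy: int, cx: int,
                       size: int, search: int) -> Tuple[int, int, int]:
    """Return (dy, dx, cost) of the best match within +/- `search` pixels.

    (dy, dx) points from the block position in the current frame to the
    matching block in the reference frame.
    """
    height, width = len(ref), len(ref[0])
    best = None
    for dy in range(-search, search + 1):
        for dx in range(-search, search + 1):
            ry, rx = cy + dy, cx + dx
            if ry < 0 or rx < 0 or ry + size > height or rx + size > width:
                continue  # candidate block would fall outside the frame
            cost = sad(ref, cur, ry, rx, cy, cx, size)
            if best is None or cost < best[2]:
                best = (dy, dx, cost)
    return best


def blank(height: int, width: int) -> Frame:
    return [[0] * width for _ in range(height)]


# A 2x2 object moves one pixel down and two pixels right between frames.
ref, cur = blank(8, 8), blank(8, 8)
for j, row in enumerate([[10, 20], [30, 40]]):
    for i, value in enumerate(row):
        ref[2 + j][2 + i] = value
        cur[3 + j][4 + i] = value

print(find_motion_vector(ref, cur, cy=3, cx=4, size=2, search=2))  # (-1, -2, 0)
```

A cost of zero means the block can be represented by the motion vector alone; a non-zero cost would leave a residual to be coded in addition to the vector.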

Efficiency of H.264

H.264 introduces a new and advanced intra prediction scheme for encoding I-frames. This greatly reduces the bit size of an I-frame and maintains high quality by enabling the successive prediction of smaller blocks of pixels within each macroblock in a frame. This is done by trying to find matching pixels among the earlier-encoded pixels that border a new 4x4 pixel block to be intra-coded.
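The idea of predicting a 4x4 block from the already-encoded pixels that border it can be sketched as follows. This simplified example tries only three prediction directions (vertical, horizontal and DC, i.e. a rounded average) and picks the one with the smallest sum of absolute differences; the H.264 standard defines more 4x4 prediction modes, and the function names and selection rule here are illustrative assumptions rather than the standard's procedure.

```python
from typing import List, Sequence, Tuple

Block = List[List[int]]


def predict_4x4(top: Sequence[int], left: Sequence[int], mode: str) -> Block:
    """Predict a 4x4 block from the 4 pixels above and the 4 pixels to the left."""
    if mode == "vertical":      # copy the row above downwards
        return [list(top) for _ in range(4)]
    if mode == "horizontal":    # copy the left column rightwards
        return [[left[r]] * 4 for r in range(4)]
    if mode == "dc":            # rounded mean of the 8 bordering pixels
        dc = (sum(top) + sum(left) + 4) >> 3
        return [[dc] * 4 for _ in range(4)]
    raise ValueError(f"unknown mode: {mode}")


def best_mode(block: Block, top: Sequence[int],
              left: Sequence[int]) -> Tuple[str, int]:
    """Return (mode, cost) for the prediction with the lowest SAD."""
    best = None
    for mode in ("vertical", "horizontal", "dc"):
        pred = predict_4x4(top, left, mode)
        cost = sum(abs(block[r][c] - pred[r][c])
                   for r in range(4) for c in range(4))
        if best is None or cost < best[1]:
            best = (mode, cost)
    return best


top = [10, 20, 30, 40]    # already-encoded pixels above the block
left = [5, 5, 5, 5]       # already-encoded pixels to the left of the block
block = [[10, 20, 30, 40] for _ in range(4)]  # vertical stripes

print(best_mode(block, top, left))  # ('vertical', 0)
```

When the prediction matches well, the encoder only needs to signal the chosen mode and a small residual instead of the full pixel values, which is where the reduction in I-frame size comes from.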

By reusing pixel values that have already been encoded, the bit size can be drastically reduced. This new H.264 technology has proven to be very efficient. Block-based motion compensation – used in encoding P- and B-frames – has also been improved. An H.264 encoder can choose to search for matching blocks – down to sub-pixel accuracy – in a few or many areas of one or several reference frames. The high degree of flexibility in H.264’s block-based motion compensation pays off in crowded surveillance scenes where the quality can be maintained for demanding applications.

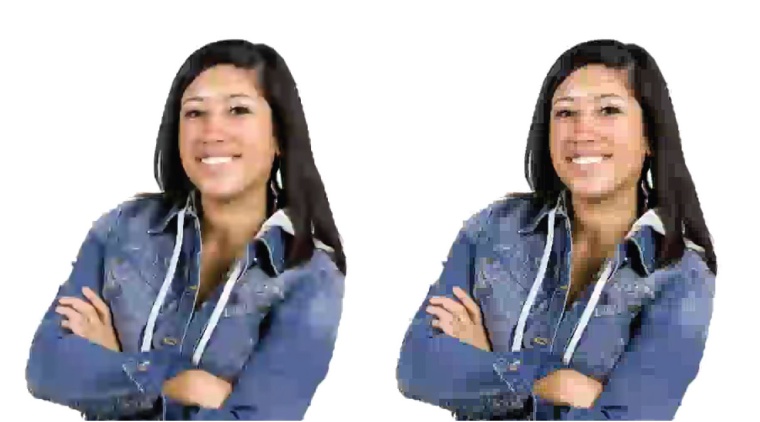

With H.264, typical blocky artifacts can be reduced by using an in-loop deblocking filter. This filter smooths block edges using an adaptive strength to deliver an almost perfect decompressed video. In the video surveillance industry, H.264 will most likely find the quickest uptake in high frame rate and high resolution applications, such as the surveillance of highways, airports and casinos, where the use of 30/25 (NTSC/PAL) frames per second is the norm.

This is where the economies of reduced bandwidth and storage will deliver the biggest savings. H.264 is also expected to accelerate the adoption of megapixel cameras since the highly efficient compression technology can reduce the large file sizes and bit rates generated without compromising image quality. There are tradeoffs, however – H.264 will require higher performance network cameras and monitoring stations.

Contact:

Dominic Bruning

Axis Communications,

Preston, United Kingdom

Tel. (direct): +44 1462 427 910

Mobile: +44 7990 597 903

dominic.bruning@axis.com

www.axis.com
